Research

My research follows three lines: theoretical work, software design and development, and applications, notably in the humanities and social sciences and in health.

Theoretical work

Induction graphs

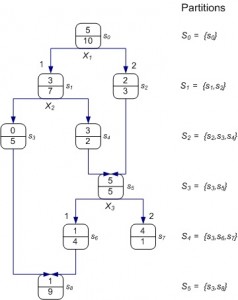

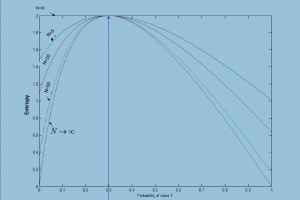

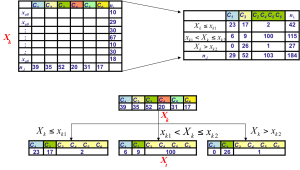

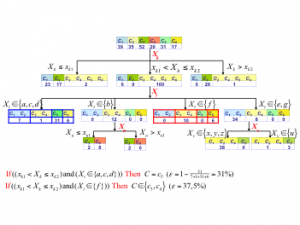

Induction graphs generalize decision trees. Building on my thesis work (1985), I proposed new algorithms for rule induction using lattice graphs, together with a family of entropy measures that are sensitive to sample size. The motivation is that classical decision trees are built by recursive partitioning, which most often produces nodes containing few individuals. The decision rules attached to these nodes are therefore unreliable, and the resulting models generalize poorly. The first answer to this problem came in the mid-1980s from Breiman et al., with tree-pruning procedures.

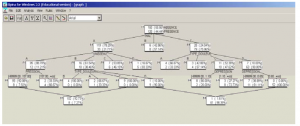

Our proposal, which introduces lattice structures and new partitioning measures, can avoid the overfitting that makes pruning necessary. This contribution can be seen as a form of pre-pruning, in contrast to the post-pruning proposed earlier.
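The link between a sample-size-sensitive entropy and pre-pruning can be illustrated with a minimal sketch. It assumes a Laplace-smoothed Shannon entropy, where class frequencies are estimated as (n_k + λ)/(n + Kλ): small nodes are pulled towards the uniform distribution, so splitting them into even smaller children is penalized. This is one simple way to obtain size sensitivity, not necessarily the exact family of measures proposed in this work; the names `laplace_entropy`, `split_gain`, `should_split` and the parameter `lam` are illustrative.

```python
import math
from typing import Sequence


def laplace_entropy(counts: Sequence[int], lam: float = 1.0) -> float:
    """Shannon entropy (bits) of a node, from Laplace-smoothed class frequencies.

    Each frequency is estimated as (n_k + lam) / (n + K * lam). With lam > 0,
    a small node gets a higher entropy than a large node with the same
    observed proportions; lam = 0 gives the classical Shannon entropy.
    """
    n = sum(counts)
    k = len(counts)
    total = n + k * lam
    if total == 0:
        return 0.0  # empty node with no smoothing: nothing to measure
    h = 0.0
    for c in counts:
        p = (c + lam) / total
        if p > 0:
            h -= p * math.log2(p)
    return h


def split_gain(parent: Sequence[int], children: Sequence[Sequence[int]], lam: float = 1.0) -> float:
    """Entropy reduction of a split, each child weighted by its share of individuals."""
    n = sum(parent)
    weighted = sum(sum(child) / n * laplace_entropy(child, lam) for child in children)
    return laplace_entropy(parent, lam) - weighted


def should_split(parent, children, lam: float = 1.0) -> bool:
    """Pre-pruning rule: split only if the smoothed entropy actually decreases."""
    return split_gain(parent, children, lam) > 0
```

With the same 2:1 class proportions, a node of three individuals is not split when `lam=1`, while a node a hundred times larger is; with `lam=0` (classical entropy) both splits would be accepted, which is why post-pruning is then needed.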

Discretization, grouping of categories and recursive partitioning

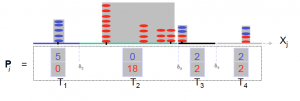

Recursive partitioning requires continuous attributes to be discretized. We have proposed new approaches that take several issues into account, such as the complexity of the cut and the statistical estimation of the most likely breakpoints. In a sense, discretization amounts to reducing the number of possible branches in an induction graph. As an extension, we addressed the same question for categorical variables that may have many categories. Going further, why not extend these questions to the target variable (the one to be predicted), which may be of any type?
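To make the notion of a breakpoint concrete, here is a minimal sketch of a generic entropy-based binary cut: candidate cuts are midpoints between consecutive distinct values, and the cut minimizing the size-weighted class entropy of the two intervals is kept. This is a classical baseline, not the specific approaches proposed in this work; `best_cut` and its `min_size` parameter (a minimum number of individuals per interval, a crude guard against unreliable small intervals) are illustrative assumptions.

```python
import math
from collections import Counter


def entropy(labels) -> float:
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_cut(values, labels, min_size: int = 1):
    """Return (cut_point, weighted_entropy) of the best binary cut, or None.

    Each side of the cut must contain at least `min_size` individuals.
    """
    if min_size < 1:
        raise ValueError("min_size must be at least 1")
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    n = len(pairs)
    best = None
    for i in range(min_size, n - min_size + 1):
        # A cut is only possible between two distinct consecutive values.
        if pairs[i - 1][0] == pairs[i][0]:
            continue
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        w = (len(left) * entropy(left) + len(right) * entropy(right)) / n
        if best is None or w < best[1]:
            best = ((pairs[i - 1][0] + pairs[i][0]) / 2, w)
    return best
```

For example, `best_cut([1, 2, 3, 10, 11, 12], list("aaabbb"))` places the cut at 6.5, where both intervals are pure. Applying the same search recursively to each interval yields a multi-interval discretization.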

This work led us to propose a generalized form of recursive partitioning. It yields a generic framework for building induction graphs in either a supervised or an unsupervised setting, whatever the type and number of predictor or predicted variables. This is a generalization of decision trees. Recently, as part of Vincent Pisetta's thesis (see latest publications), we have used random forests to build kernel functions, leading to classifiers that outperform SVMs.
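One common way to turn a random forest into a kernel is the proximity kernel: two samples are similar in proportion to the number of trees in which they fall into the same leaf. The sketch below assumes the leaf indices have already been produced by a trained forest (for example, the array of shape (n_samples, n_trees) returned by scikit-learn's `RandomForestClassifier.apply`). It illustrates the general idea only and is not necessarily the construction developed in the thesis mentioned above; `forest_kernel` is an illustrative name. Each tree contributes the Gram matrix of one-hot leaf indicators, so the average is positive semidefinite and usable as an SVM kernel.

```python
from typing import List, Sequence


def forest_kernel(leaves: Sequence[Sequence[int]]) -> List[List[float]]:
    """Random-forest proximity kernel.

    leaves[i][t] is the leaf reached by sample i in tree t (one row per
    sample, one column per tree). K[i][j] is the fraction of trees in which
    samples i and j end up in the same leaf.
    """
    n = len(leaves)
    if n == 0:
        return []
    n_trees = len(leaves[0])
    if n_trees == 0:
        raise ValueError("at least one tree is required")
    kernel = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):  # the kernel is symmetric: fill both halves at once
            same = sum(1 for t in range(n_trees) if leaves[i][t] == leaves[j][t])
            kernel[i][j] = kernel[j][i] = same / n_trees
    return kernel
```

The resulting matrix can be passed to an SVM that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`.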

Topological graphs

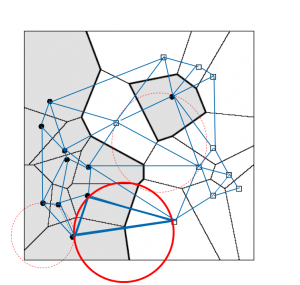

Among the best-known classifiers, k-nearest neighbors (kNN) has performed well. However, the neighborhood relation induced by kNN is not symmetric, and the resulting graph can be disconnected. For this reason, and many others that I set aside for lack of space, it seemed worthwhile to investigate more appropriate structures. We therefore used geometric models such as Delaunay polyhedra and Gabriel graphs, which better reflect the topology of the training points and produce connected graphs in which the neighborhood relation is always symmetric. This allowed us to address a key problem in machine learning: class separability. Indeed, if the classes were randomly distributed in the representation space, a learning algorithm would have nothing to find: any model it induced would be overly specific because of overfitting, and would do no better than an oracle predicting the a priori class probabilities. By studying the geometric structure of these graphs, and guided by work in spatial statistics such as that of Cliff and Ord, we established the exact distribution of the number of cut edges when the classes are randomly distributed. An edge of the graph is cut if it connects two points of different classes. On this basis, we proposed a statistical test. Beyond class separability, we are continuing this work to find the best kernel functions, namely those that lead to better class separability. This direction seems particularly interesting for two reasons:

- It offers extensions to methods based on SVMs (Support Vector Machines), notably through the construction of new types of kernel functions;

- It leads to a new view of learning that places topological structure at the center of the learning algorithm.
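The cut-edge idea can be illustrated with a small sketch, assuming points given as coordinate tuples. It builds a Gabriel graph by brute force (two points are joined when no third point lies strictly inside the circle having them as diameter), counts cut edges, computes their expected number when class labels are randomly permuted, and estimates a lower-tail p-value with a Monte Carlo permutation test. The permutation test is a stand-in chosen for brevity, not the exact distribution derived in the work described above; all function names are illustrative.

```python
import random
from collections import Counter
from itertools import combinations


def sq_dist(a, b) -> float:
    return sum((u - v) ** 2 for u, v in zip(a, b))


def gabriel_edges(points):
    """Edges (i, j) with no other point strictly inside the sphere of diameter [i, j].

    Point k is strictly inside iff d(i,k)^2 + d(j,k)^2 < d(i,j)^2. Brute force, O(n^3).
    """
    edges = []
    for i, j in combinations(range(len(points)), 2):
        dij = sq_dist(points[i], points[j])
        if all(sq_dist(points[i], points[k]) + sq_dist(points[j], points[k]) >= dij
               for k in range(len(points)) if k not in (i, j)):
            edges.append((i, j))
    return edges


def cut_edges(edges, labels) -> int:
    """Number of edges joining two points of different classes."""
    return sum(1 for i, j in edges if labels[i] != labels[j])


def expected_cut_edges(edges, labels) -> float:
    """Expected cut edges when the observed labels are randomly permuted over the points.

    Two distinct points share a class with probability sum_k n_k (n_k - 1) / (n (n - 1)).
    """
    n = len(labels)
    same = sum(c * (c - 1) for c in Counter(labels).values()) / (n * (n - 1))
    return len(edges) * (1 - same)


def permutation_test(edges, labels, n_perm: int = 999, seed: int = 0):
    """Return (observed cut edges, Monte Carlo p-value for 'fewer cut edges than chance')."""
    observed = cut_edges(edges, labels)
    rng = random.Random(seed)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if cut_edges(edges, shuffled) <= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)
```

For two well-separated groups of four points, such as the corners of the unit square (class "a") and of the square with corners (10, 10) and (11, 11) (class "b"), the Gabriel graph has 13 edges and only one of them is cut, against 52/7 ≈ 7.4 expected under random labelling, and the permutation p-value is small.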

Mining complex data

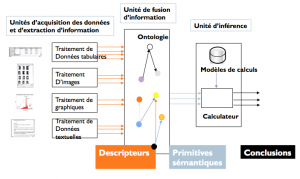

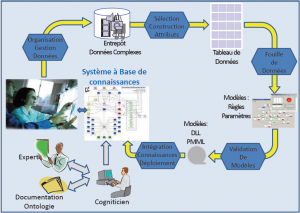

Data mining techniques, which aim to exploit massive data to extract knowledge or information relevant to the user, are well suited to tabular data described by attribute-value pairs. However, data available in this format account for only a small proportion, between 10% and 15%, of digital data. The real scientific and technological challenges lie in mining complex data, such as those found on the web (text, images, videos). These complex data are harder to search and therefore require new approaches and tools. With this in mind, from the early 2000s I began working on this problem along two directions:

- Content-based search in complex data (information retrieval (IR), organization and indexing). The aim is to find ways of representing complex data so that they can be queried and navigated. Once built, geometric graphs offer strong potential for navigation and information retrieval;

- Inclusion of domain knowledge in mining complex data:

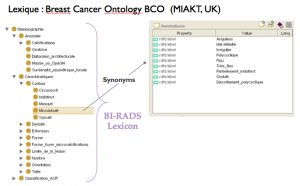

In our early work, we quickly realized that searching complex corpora could be improved by taking external information into account. This idea led us to strategies that incorporate domain knowledge through ontologies.
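To illustrate how a geometric neighborhood graph supports navigation and retrieval, here is a minimal greedy walk, assuming points stored as coordinate tuples and an adjacency list that could come from, for instance, a Gabriel or Delaunay graph. From a starting vertex, it repeatedly moves to the neighbor closest to the query and stops when no neighbor is closer. This is a generic technique, not the specific retrieval method developed in this work, and `greedy_search` is an illustrative name; on a general graph the walk may stop at a local minimum, so it should be read as an approximate search.

```python
def greedy_search(points, neighbors, start, query):
    """Greedy walk on a neighborhood graph towards `query`.

    points[i] is the coordinate tuple of vertex i; neighbors[i] lists its
    adjacent vertices. Returns the list of visited vertices; the last one is
    a local minimum of the distance to the query.
    """
    def d2(i):
        return sum((u - v) ** 2 for u, v in zip(points[i], query))

    path = [start]
    current = start
    while True:
        best = min(neighbors[current], key=d2, default=current)
        if d2(best) >= d2(current):
            return path  # no neighbor is closer: stop here
        current = best
        path.append(current)
```

On a chain of five points along the x-axis, starting from the origin with the query (3.2, 0), the walk visits vertices 0, 1, 2 and 3 and stops at the point (3, 0). Because each step strictly decreases the distance to the query, the walk always terminates.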

Software development

Since the beginning of my academic career, I have chosen to distribute my software freely. We were among the first in the world (1995) to offer data mining software for free download on the Internet: SIPINA, a data mining tool based on induction graphs. It has since gone through numerous versions and was fully taken over within the Tanagra platform by a researcher in my laboratory; Tanagra [1] is now one of the flagship software packages in the field.

Applied work

Under contracts or ad hoc collaborations, I have conducted numerous applied research projects, mainly in two areas:

- Health: complex data mining applied to computer-aided diagnosis of breast cancer, with the Centre Léon Bérard in Lyon, the Medical Imaging Center of Clermont-Ferrand and the company Fenics. I am currently coordinating a European project, “FLURESP”, designed to model pandemic influenza. This project is funded by the European Commission (DG SANCO) with overall funding of 700,000 euros and involves eight European teams.

- The humanities and social sciences: numerous collaborations involving applications such as text mining, notably with the Institute of Humanities of Lyon. We are currently working on opinion mining and on social networks such as Twitter.

[1] http://eric.univ-lyon2.fr/~ricco/tanagra/en/tanagra.html